Image -> 3D: What It Can and Can’t Do

Understanding the boundaries of Photogrammetry, GSplats, and NeRFs

One of the most common questions I get when introducing 3D workflows to executives or people new to the space is:

"Wait… can’t I just take a picture or video of the object I want in 3D?"

It’s a fair question, and the answer is: yes… but.

Let’s break it down. Not because you don’t already understand this, but because if you work in 3D, you will be asked. It helps to have a clear, confident response ready.

Can you create a 3D model from a photo?

Yes, absolutely. There are a few methods available today:

Photogrammetry

Gaussian Splatting (GSplats)

Neural Radiance Fields (NeRFs)

These techniques can produce impressive results. I’ve seen photorealistic reconstructions on LinkedIn that look incredible. Under ideal conditions, these approaches are effective—and they’re only improving.

If you want a breakdown of how each one works, I cover that in a separate article:

WTF is a Gaussian Splat!?!?

The Dream: One Picture = Full 3D Model

All of the methods above generally require dozens of photos or a video. So the obvious question is: what about taking just one image of something and having AI hallucinate the rest?

The short answer is: not yet. Some AI models attempt single-image 3D generation, but the results are still limited. Why?

Because AI has to guess. If it’s your unique product…say, a new shoe design or a custom piece of furniture…that guess won’t be accurate. You might get a shoe, but not your shoe.

There are infinite variations, and AI can’t predict the exact geometry, materials, or design intent from a single photo, especially for something that has never existed before.

Technical Limitations

Even with traditional photogrammetry (multiple photos, good lighting, high-res cameras), there are challenges:

Hidden geometry: Think of armpits or the underside of a chair. These are often missed or poorly captured.

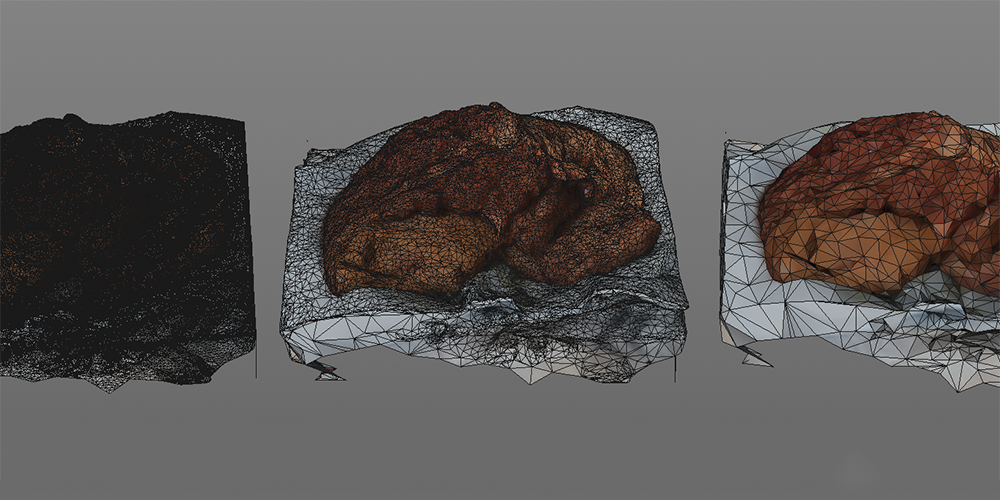

Messy geometry: Outputs usually require cleanup—remeshing, retopology, and removing baked-in shadows.

Performance issues: High-poly scans don’t always translate well to real-time or web-based workflows.

Sure, these issues might improve over time. But they’re not the most important limitation.

The Bigger Problem: Workflow Limitations

Even if we had a magical "one-click photo-to-3D" tool, it would only help with a small slice of what 3D artists actually do.

Most of our work isn’t about replicating existing objects. It’s about visualizing things that don’t exist yet:

A car concept that hasn’t been built

A character still in development

A dress for next season’s collection

You can’t take a picture of something that hasn’t been made yet, because it only exists in your imagination.

This is the critical gap photo-based 3D tools can’t—and likely won’t—bridge.

Should 3D Artists Be Worried?

Not about this.

These tools are exciting. They’ll make it easier for more people to explore 3D. But they don’t replace ideation, storytelling, or design thinking.

Even if a company uses photo-to-3D tech to get a rough model, they’ll still need someone to:

Clean it up

Match materials

Prep it for rendering, animation, or interactivity

Most importantly, design the next version

This tech might accelerate part of the pipeline, but it doesn’t eliminate the need for creative professionals.

Final Thoughts

It’s easy to feel anxious about rapid change—especially when it feels like AI is creeping into every creative space. But in this case, the reality is reassuring:

Image-to-3D workflows are useful—but they’re not a replacement for 3D artists. They serve a narrow function, and they’ll never be a stand-in for imagination, iteration, and storytelling.

And honestly? The more people who engage with 3D, the more they’ll recognize the value of skilled artists who can turn raw data into compelling, production-ready content.

The 3D Artist Community Updates

3D Merch is here, and we have a new hoodie!

3D News of the Week

Watch How Cotton Turns into T-Shirts in This Hypnotizing Animation - 80.lv

Epic is making it easier to create MetaHumans - The Verge

Meet MotionMaker, Maya's new animation tool, powered by Autodesk AI! - LinkedIn

Exclusive Trailer: Locksmith Animation’s First Short Film ‘Cardboard’ - Cartoon Brew

Real-Time 3D: The New Frontier In Fashion And Luxury - Interline

3D Job Spreadsheet

Link to Google Doc With A TON of Jobs in Animation (not operated by me)

Hello! Michael Tanzillo here. I am the Head of Technical Artists with the Substance 3D team at Adobe. Previously, I was a Senior Artist on animated films at Blue Sky Studios/Disney with credits including three Ice Age movies, two Rios, Peanuts, Ferdinand, Spies in Disguise, and Epic.

In addition to my work as an artist, I am the co-author of the book Lighting for Animation: The Visual Art of Storytelling and the co-founder of The Academy of Animated Art, an online school that has helped hundreds of artists around the world begin careers in Animation, Visual Effects, and Digital Imaging. I also created The 3D Artist Community on Skool and this newsletter.

www.michaeltanzillo.com

Free 3D Tutorials on the Michael Tanzillo YouTube Channel

Thanks for reading The 3D Artist! Subscribe for free to receive new posts and support my work. All views and opinions are my own!